Less than two years ago, the launch of ChatGPT started a generative AI frenzy. Some said the technology would trigger a fourth Industrial Revolution, completely reshaping the world as we know it.

In March 2023, Goldman Sachs predicted 300 million jobs could be lost or degraded due to AI. A huge shift seemed to be under way.

Eighteen months later, generative AI is not transforming business. Many projects using the technology are being cancelled, such as an attempt by McDonald’s to automate drive-through ordering, which went viral on TikTok after producing comical failures. Government efforts to build systems to summarise public submissions and calculate welfare entitlements have met the same fate.

So, what happened?

The AI hype cycle

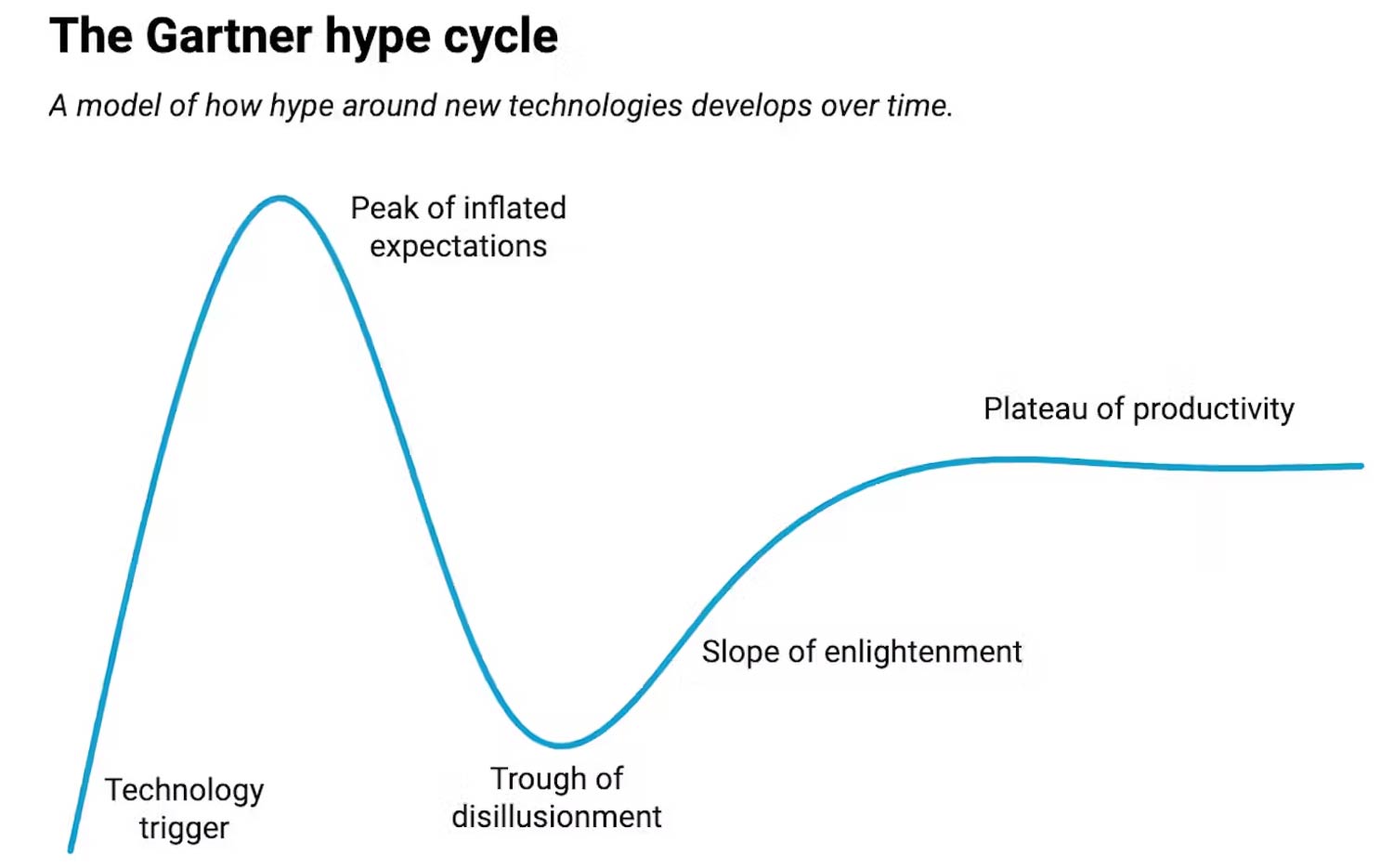

Like many new technologies, generative AI has been following a path known as the Gartner hype cycle, first described by American tech research firm Gartner.

This widely used model describes a recurring process in which the initial success of a technology leads to inflated public expectations that eventually fail to be realised. After the early “peak of inflated expectations” comes a “trough of disillusionment”, followed by a “slope of enlightenment” which eventually reaches a “plateau of productivity”.

A Gartner report published in June listed most generative AI technologies as either at the peak of inflated expectations or still going upward. The report argued most of these technologies are two to five years away from becoming fully productive.

Many compelling prototypes of generative AI products have been developed, but adopting them in practice has been less successful. A study published last week by American think tank Rand showed 80% of AI projects fail, more than double the rate for non-AI projects.

Shortcomings

The Rand report lists many difficulties with generative AI, ranging from high investment requirements in data and AI infrastructure to a lack of needed human talent. However, the unusual nature of gen AI’s limitations represents a critical challenge.

For example, generative AI systems can solve some highly complex university admission tests yet fail very simple tasks. This makes it very hard to judge the potential of these technologies, which leads to false confidence.

After all, if it can solve complex differential equations or write an essay, it should be able to take simple drive-through orders, right?

A recent study showed that the abilities of large language models such as GPT-4 don’t always match what people expect of them. In particular, more capable models severely underperformed in high-stakes cases where incorrect responses could be catastrophic.

These results suggest these models can induce false confidence in their users. Because they answer questions fluently, humans can reach overoptimistic conclusions about their capabilities and deploy the models in situations they are not suited for.

Experience from successful projects shows it is tough to make a generative model follow instructions. For example, Khan Academy’s Khanmigo tutoring system often revealed the correct answers to questions despite being instructed not to.

So why isn’t the generative AI hype over yet? There are a few reasons for this.

First, generative AI technology, despite its challenges, is rapidly improving, with scale and size being the primary drivers of the improvement.

Improved models

Research shows that the size of language models (number of parameters), as well as the amount of data and computing power used for training, all contribute to improved model performance. In contrast, the architecture of the neural network powering the model seems to have minimal impact.

Large language models also display so-called emergent abilities, which are unexpected abilities in tasks for which they haven’t been trained. Researchers have reported new capabilities “emerging” when models reach a specific critical “breakthrough” size.

Studies have found sufficiently complex large language models can develop the ability to reason by analogy and even reproduce optical illusions like those experienced by humans. The precise causes of these observations are contested, but there is no doubt large language models are becoming more sophisticated.

So, AI companies are still at work on bigger and more expensive models, and tech companies such as Microsoft and Apple are betting on returns from their existing investments in generative AI. According to one recent estimate, generative AI will need to produce US$600-billion in annual revenue to justify current investments, and this figure is likely to grow to $1-trillion in the coming years.

For the moment, the biggest winner from the generative AI boom is Nvidia, the largest producer of the chips powering the AI arms race. As the proverbial shovel-makers in a gold rush, Nvidia recently became the most valuable public company in history, tripling its share price in a single year to reach a valuation of $3-trillion in June.

What comes next?

As the AI hype begins to deflate and we move through the period of disillusionment, we are also seeing more realistic AI adoption strategies.

- First, AI is being used to support humans, rather than replace them. A recent survey of American companies found they are mainly using AI to improve efficiency (49%), reduce labour costs (47%) and enhance the quality of products (58%).

- Second, we also see a rise in smaller (and cheaper) generative AI models, trained on specific data and deployed locally to reduce costs and optimise efficiency. Even OpenAI, which has led the race for ever-larger models, has released the GPT-4o Mini model to reduce costs and improve performance.

- Third, we see a strong focus on providing AI literacy training and educating the workforce on how AI works, its potential and limitations, and best practices for ethical AI use. We are likely to have to learn (and re-learn) how to use different AI technologies for years to come.

In the end, the AI revolution will look more like an evolution. Its use will gradually grow over time and, little by little, alter and transform human activities. Which is much better than replacing them.

- The author, Vitomir Kovanovic, is senior lecturer in learning analytics, University of South Australia

- This article is republished from The Conversation under a Creative Commons licence